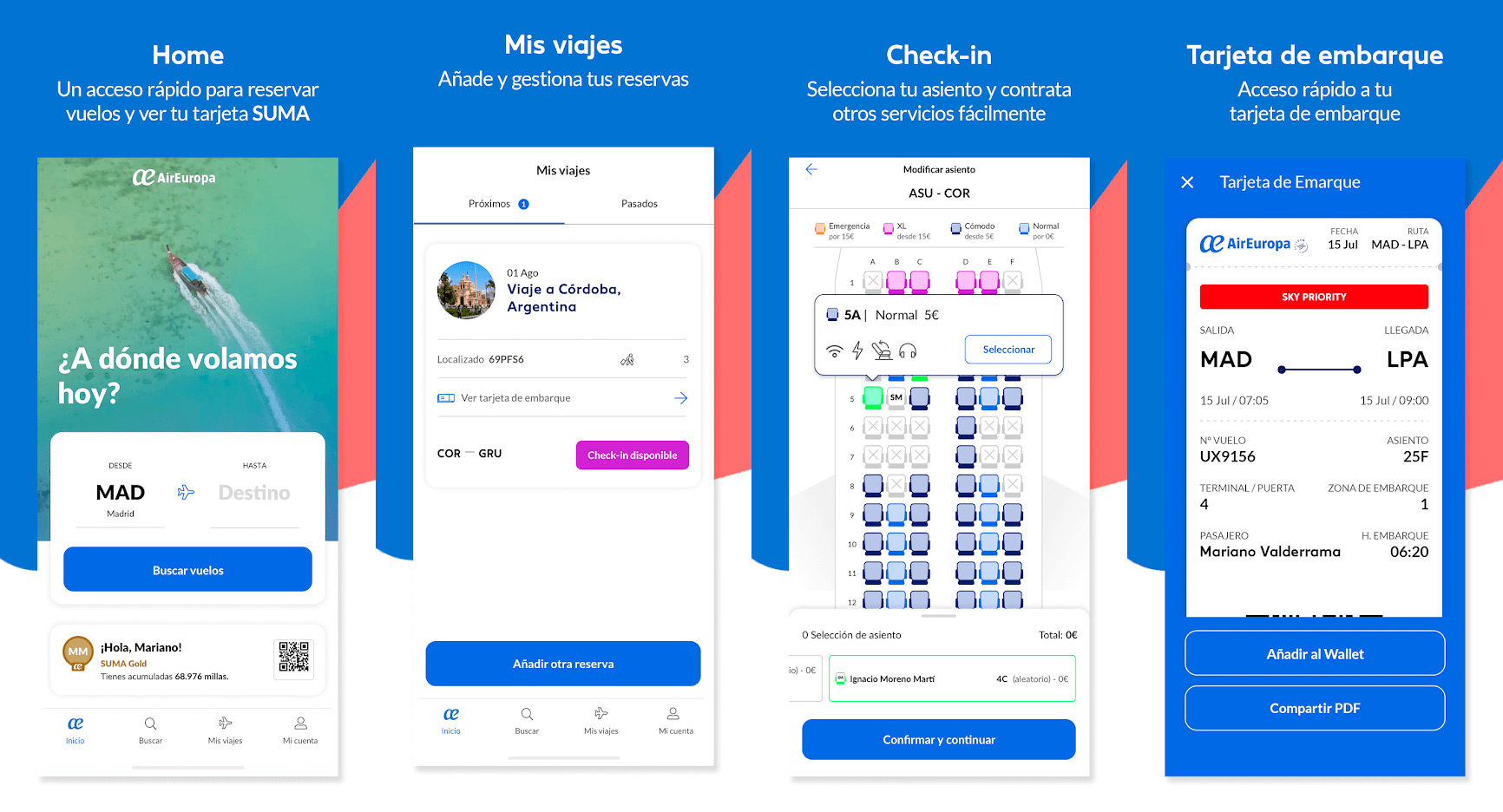

In the last few weeks we have finished renewing all of Air Europa’s digital products. It is a complex ecosystem: a responsive website and two apps (Android and iOS), all available in 24 markets and 8 languages, with several transactional flows (ticket sales, check-in, ancillary services), several payment systems, a logged-in user area… plus everything that forms part of the operations of a company that runs 24 hours a day, 365 days a year.

Although there will be further changes in the coming months, I think the result is good. And if we take into account the available resources and how COVID has affected all our plans since we started the process 18 months ago, I would say it has been excellent.

This is not the first time I have worked on this type of process. They are complex and very different from the usual daily or weekly deployments, where “minor” modifications are made to a system that is already running. In my opinion, when you launch a new website, huge changes (visual design, features, programming languages, server configurations, redirects, etc.) are all activated at the same time… which adds an extra layer of difficulty.

As is almost always the case, there were hits and misses to learn from. I have found that when I write a post about a book (Strategize by Roman Pichler), an event (UX and Agile at Habitissimo), a course (User stories) or any kind of professional experience (How to internationalise an ecommerce site), it helps me internalise what I learnt and remember it better in the future. And if this post helps anyone who reads it in any way, so much the better.

Tests are tests and real life is real life.

Of course, but we tend to forget it.

Nobody wants their product to fail, so everyone runs tests before launching a new version: usability tests (UX), functional tests, load tests, manual or automated… all to avoid failing on D-day. But, in my opinion, the problem is that tests are, precisely, tests. They are carried out under specific circumstances that are never fully comparable to reality, for several reasons:

UX area

Tests in the UX area are essential for detecting design and interaction problems. They can be face-to-face or remote, moderated or unmoderated, with low-fi or hi-fi prototypes… but any usability test is tremendously helpful and saves a lot of development time, because issues are detected at an early stage. However, by their very nature, these tests quite often focus on the perfect happy path and overlook other possibilities.

Moreover, prototypes are never 100% real, and they are never implemented in exactly the same way: that drop-down menu that ends up developed differently because of some technical limitation, that last-minute change because we forgot a use case and had to modify the behaviour of the whole feature, etc.

Technology area

When you launch a product, there is never enough time to complete every task. This happens in design and programming… but also in QA. Prioritising is a must to get the product to market as soon as possible, which means there will always be more tests to run, more automated tests to configure and… more bugs to discover.

Why? Because technologies and systems are increasingly complex, and behaviour depends on the device (desktop or mobile), the screen size, the browser, the operating system, etc., which multiplies the number of tests.

Additionally, as also happens with UX tests, there are elements that are almost impossible to certify in a 100% real environment. Good practice says that all systems should be configured identically across environments, but in reality that is not always the case, because of complexity or cost.

On this occasion we had issues with the anti-bot system. But I have come across similar situations before: trouble with the load balancer, one of the many servers where the code had not been deployed properly and caused “random” problems, or simply issues caused by high traffic volumes.

On other occasions, I have had issues derived from the user’s geolocation: for example, a CDN whose behaviour was mysteriously different depending on the country the request came from, or unbelievable bugs caused by the time difference between two parts of the world.

Nor can we forget that the type and quality of the user’s network connection can turn something that “works perfectly in the tests” into “we have an issue in production”: from low-speed connections that generate timeouts to more complex and unexpected issues caused by airport wi-fi. Curiously, I remember an article about a mobile video game company that tested its products in the building’s garage to simulate a poor-quality connection… but I do not think this is the norm.

So… should we forget the tests?

Far from it. Testing always helps you detect problems and make sure that a launch is not a complete disaster, because it verifies that the most frequent use cases and situations work properly.

User testing should be a constant part of the design process. Guerrilla testing, remote or face-to-face testing… Each and every one of them, with their pros and cons, allows us to detect usability problems before investing time in the development tasks.

There are also dedicated beta testing services with real users (e.g. Applause), and tools such as BrowserStack that offer a multitude of devices and browsers, which will undoubtedly help you detect problems when the product is close to launch.

Additionally, code testing (especially automated testing) lets you deploy more often… and it can become a new way of working that improves code quality.

But, in my opinion, there is a point beyond which tests will not prevent certain problems. So the sooner the product is in the hands of real users who can give you feedback (on both the user experience and the technical side), the better.

“Graveyards are full of brave men” but “In the long run, we are all dead”. Four areas to be considered.

One of my colleagues often says “Graveyards are full of brave men” (“El cementerio está lleno de valientes” in Spanish), but “In the long run, we are all dead” is also true. As is almost always the case, we should look for balance: I do not think we can act like kamikazes and deploy the new version to 100% of our users without a minimum of testing, nor can we wait until every issue is solved.

When it comes to making this big launch, our approach has been a mix of four elements:

1. Traffic control: activate the product for a limited segment of users

The best way to keep the rollout under control is to launch the new product with a limited amount of traffic. You can activate the new product in a specific market, for a specific traffic source, for users who arrive via a particular landing page, or for a small random percentage of traffic. Ideally, you will increase the volume gradually… but your system should let you deactivate it instantly if the negative impact is bigger than you can afford.
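One common way to implement this kind of segmented, gradual activation is a feature flag evaluated on each request. The sketch below is a hypothetical illustration, not the system we used: the `RolloutConfig` fields and the `bucket` and `serve_new_version` functions are invented for this example. A user sees the new product if they belong to an enabled market, traffic source or landing page, or if a stable hash of their user ID falls under the rollout percentage; setting `enabled` to `False` acts as the instant kill switch.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class RolloutConfig:
    """Hypothetical rollout settings; the field names are illustrative only."""
    enabled: bool = True  # kill switch: False sends every user to the old product
    markets: set[str] = field(default_factory=set)          # e.g. {"PT"}
    traffic_sources: set[str] = field(default_factory=set)  # e.g. {"newsletter"}
    landing_pages: set[str] = field(default_factory=set)    # e.g. {"/summer-offers"}
    percentage: float = 0.0  # share (0-100) of all other traffic that gets the new product


def bucket(user_id: str) -> float:
    """Map a user ID to a stable value in [0, 100): the same user always gets the same value."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000 / 100


def serve_new_version(cfg: RolloutConfig, user_id: str, market: str,
                      source: str, landing_page: str) -> bool:
    """Decide whether this request should see the new product (hypothetical helper)."""
    if not cfg.enabled:
        return False
    # Explicitly targeted segments always get the new version.
    if market in cfg.markets or source in cfg.traffic_sources or landing_page in cfg.landing_pages:
        return True
    # Everyone else gets it only if their bucket falls under the current percentage.
    return bucket(user_id) < cfg.percentage


if __name__ == "__main__":
    cfg = RolloutConfig(markets={"PT"}, percentage=5)
    print(serve_new_version(cfg, "user-42", "PT", "google", "/"))  # True: targeted market
    cfg.enabled = False
    print(serve_new_version(cfg, "user-42", "PT", "google", "/"))  # False: kill switch
```

Hashing the user ID, rather than drawing a random number on every request, keeps each user on the same version across visits, and users who already have the new version keep it as the percentage is raised.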

2. Metrics and sessions replay

As in many areas, data is a great ally. On the one hand, it helps you quantify the impact and severity of a problem, and also prioritise which problems to solve first. On the other hand, you can forget about comments such as “it sometimes works”, “it always fails” or “all the clients are complaining about it”. A new era based on objective information begins: “it works 88% of the time, but 100% of Safari users get an error”.
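Getting to a statement like that one is mostly a matter of aggregating session data by dimension. The sketch below is a hypothetical illustration: the `Session` record and the `error_report` function are invented for this example, and in practice these figures would usually come straight from an analytics or monitoring tool rather than hand-written code.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Session(NamedTuple):
    """Hypothetical session record; a real analytics export will have many more fields."""
    browser: str
    had_error: bool


def error_report(sessions: Iterable[Session]) -> tuple[float, dict[str, float]]:
    """Return the overall success rate and the error rate per browser, both in %."""
    totals: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)
    for s in sessions:
        totals[s.browser] += 1
        errors[s.browser] += int(s.had_error)
    n_sessions = sum(totals.values())
    n_errors = sum(errors.values())
    success = 100 * (n_sessions - n_errors) / n_sessions if n_sessions else 0.0
    per_browser = {b: 100 * errors[b] / totals[b] for b in totals}
    return success, per_browser


if __name__ == "__main__":
    # Made-up data reproducing the quote: 88 clean Chrome sessions, 12 failing Safari ones.
    sessions = [Session("Chrome", False)] * 88 + [Session("Safari", True)] * 12
    success, per_browser = error_report(sessions)
    print(success)      # 88.0
    print(per_browser)  # {'Chrome': 0.0, 'Safari': 100.0}
```

The overall figure alone (88%) hides the problem; it is the breakdown by browser that points straight at its cause.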

There are several tools for tracking metrics, both on the IT side and on the business/UX side: from the most common digital analytics tools, such as Google Analytics, Mixpanel or Amplitude, to more technical ones such as TrackJS, Kibana, Dynatrace or New Relic. All of them have pros and cons, but in any case they provide objective information about how the process is working.

I personally think that having a session replay system is a must, whether it is a basic tool (Hotjar or Mouseflow) or a more advanced one (Glassbox, Quantum Metric or Tealeaf). The latter also combine replay with the ability to quantify actions and analyse multiple technical parameters. This way, you can see exactly what users did to trigger the error (the page they were on, where they clicked, the value they typed into the input…) and under which circumstances (device, browser, whether they had previous errors…), and forget the typical “we cannot reproduce it”. Moreover, these types of systems are extremely handy for detecting usability issues and answering questions such as “why has conversion from the second step of the funnel to the third dropped by 10% compared with the previous version?”
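The funnel question above starts as simple arithmetic once you have the number of users reaching each step in both versions; replay then helps explain the cause. A minimal sketch, assuming per-step user counts are available for the previous and the new version (the function names and figures are hypothetical). It reads “dropped by 10%” as a relative change in the step-to-step conversion rate; a drop in percentage points would be `n - o` instead.

```python
import math


def step_conversion(funnel: list[int]) -> list[float]:
    """Conversion rate (0-1) from each funnel step to the next one."""
    return [nxt / cur if cur else 0.0 for cur, nxt in zip(funnel, funnel[1:])]


def conversion_change(old: list[int], new: list[int]) -> list[float]:
    """Relative change (%) of each step-to-step conversion, new version vs previous version."""
    changes = []
    for o, n in zip(step_conversion(old), step_conversion(new)):
        # NaN when the previous version had no conversion to compare against.
        changes.append(100 * (n - o) / o if o else math.nan)
    return changes


if __name__ == "__main__":
    previous = [1000, 600, 300, 240]  # hypothetical users per step, previous version
    current = [1000, 600, 270, 216]   # hypothetical users per step, new version
    for step, change in enumerate(conversion_change(previous, current), start=1):
        print(f"step {step} -> {step + 1}: {change:+.1f}%")
```

With these made-up numbers, the step 2 to step 3 conversion falls from 50% to 45%, which the script reports as -10.0%, while the other steps show +0.0%.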

Another interesting option is A/B testing, comparing both systems at the same time. However, when it comes to a complete product revamp, my experience tells me that in many scenarios a 100% reliable comparison is almost impossible.

One last point: if you are going to compare the new data against the previous system, you should have the baseline figures prepared beforehand. There are always many loose ends to tie up during a launch, and some information can be difficult to obtain quickly.

3. Contact with the Customer Support team

If you launch the product with a limited amount of traffic, it is difficult for the customer support team to recognise an issue related to the new product among all the usual phone calls and emails. Even so, it is worth making an effort to filter them out and to keep a dedicated communication channel, focused on the launch, between the teams involved.

4. Set aside time to solve issues

There will be issues. Some will be more important than others, some more complicated to solve, but there will be issues. You should set aside time in your roadmap to make adjustments and solve the problems that arise after the launch. If you are lucky and everything goes perfectly, you will have extra time that you didn’t count on (a great thing, isn’t it?).

Conclusions

Issues will always appear, so I personally think that, rather than trying to avoid every failure, what matters most is the ability to solve them quickly.

And I am always surprised by how many people believe that a new system cannot fail and must be perfect at the moment of launch… while an older one with hundreds of errors keeps running and is used by clients day after day without major incident.

Every type of test is among the best tools at our disposal. But, in my humble opinion, relying on them entirely and not validating new developments in the real world as soon as possible only delays the detection of problems that, sooner or later, will appear.

10 May 2021 at 8:44 am

Excellent post! Thank you for sharing!

10 May 2021 at 4:00 pm

Thanks Moa 🙂